How do images get converted to tensors in PyTorch?

How does an image get converted into a tensor that can be fed as input into a neural network? Essentially, the pixels in an image get rearranged and normalised so that a neural net can process them efficiently. Here’s a detailed breakdown of the to_tensor(pic) function from torchvision, PyTorch’s companion library for vision.

The function accepts 3 main types of image input: a NumPy N-dimensional array (ndarray), a PIL Image and an accimage Image. Some validation checks are performed first, and then each case is handled differently.

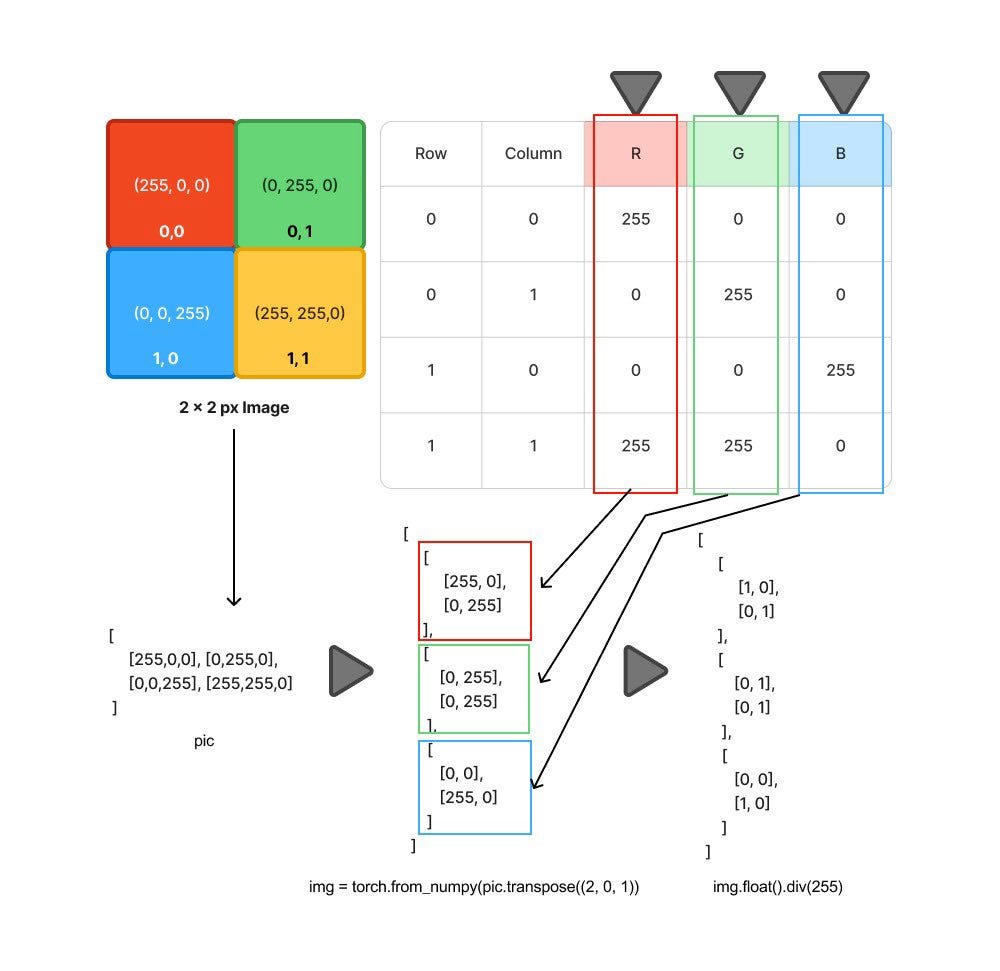

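For a NumPy N-dimensional array, it changes the order of dimensions from Height-Width-Channel to Channel-Height-Width (using pic.transpose((2, 0, 1))), converts the result into a tensor (using torch.from_numpy) and, for 8-bit (uint8) data, scales the values from the 0 to 255 range down to the 0 to 1 range (using img.float().div(255)). Keeping inputs in a small, consistent floating-point range generally makes training better behaved.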

Here’s what the transformation looks like for a 2x2-pixel image with 3 RGB channels.
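As a minimal sketch of the NumPy path, the snippet below applies the same three steps by hand to a 2x2 RGB image. The pixel values and the variable names (hwc, chw, tensor) are made up for illustration; this mirrors the steps described above rather than reproducing torchvision’s source.

```python
import numpy as np
import torch

# A 2x2 RGB image in Height-Width-Channel layout, dtype uint8.
# Pixels: red, green (top row); blue, white (bottom row).
hwc = np.array(
    [[[255, 0, 0], [0, 255, 0]],
     [[0, 0, 255], [255, 255, 255]]],
    dtype=np.uint8,
)

# Reorder to Channel-Height-Width; the copy makes the memory contiguous.
chw = np.ascontiguousarray(hwc.transpose((2, 0, 1)))

# Wrap as a tensor and scale 0..255 down to 0.0..1.0.
tensor = torch.from_numpy(chw).float().div(255)

print(tensor.shape)  # torch.Size([3, 2, 2])
print(tensor[0])     # red channel: [[1., 0.], [0., 1.]]
```

The red channel is 1.0 wherever a pixel contains full red (the red and white pixels) and 0.0 elsewhere.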

When the image type is accimage (https://github.com/pytorch/accimage), a special image type that can be used for faster image loading, it is handled separately and converted to a tensor.

First it creates a new zero-filled NumPy array to hold the image data, then copies the image data from the accimage object into that array (using pic.copyto(nppic)), and finally converts the NumPy array to a torch tensor (using torch.from_numpy(nppic)).
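Since accimage is rarely installed, the sketch below uses a hypothetical stand-in class, FakeAccImage, that exposes only the attributes the steps above rely on (channels, height, width and copyto). Both the class and the helper accimage_like_to_tensor are assumptions for illustration, not part of accimage or torchvision.

```python
import numpy as np
import torch


class FakeAccImage:
    """Hypothetical stand-in for an accimage image, for illustration only."""

    def __init__(self, data):
        # Pixel data stored as Channel x Height x Width float32 values.
        self._data = np.asarray(data, dtype=np.float32)
        self.channels, self.height, self.width = self._data.shape

    def copyto(self, out):
        # Copy the pixel data into a caller-provided buffer.
        out[...] = self._data


def accimage_like_to_tensor(pic):
    # Pre-allocate a zero-filled float32 buffer in Channel-Height-Width layout.
    nppic = np.zeros([pic.channels, pic.height, pic.width], dtype=np.float32)
    # Let the image object fill the buffer, then wrap it as a tensor.
    pic.copyto(nppic)
    return torch.from_numpy(nppic)


pic = FakeAccImage(np.full((3, 2, 2), 0.5))
t = accimage_like_to_tensor(pic)
print(t.shape, t.dtype)  # torch.Size([3, 2, 2]) torch.float32
```

Note that no transpose is needed on this path, because the buffer is allocated in Channel-Height-Width order from the start.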

For PIL images, the raw pixel data isn’t directly exposed as a multi-dimensional array. Instead, the `.mode` attribute defines the pixel format and number of channels.

Some common modes include:

‘L’: 8-bit pixels, grayscale

‘I’: 32-bit signed integer pixels

‘I;16’: 16-bit unsigned integer pixels

‘YCbCr’: 3x8-bit pixels, luminance/chrominance color model, used by JPEG
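To see how the mode determines the number of channels, here is a small sketch using Pillow. It creates blank images in a few modes and counts their bands; the image size and the channels dictionary are arbitrary choices for illustration.

```python
from PIL import Image

channels = {}
for mode in ("L", "I", "I;16", "YCbCr", "RGB"):
    img = Image.new(mode, (4, 2))  # size is given as (width, height)
    # getbands() returns one name per channel, e.g. ('R', 'G', 'B').
    channels[mode] = len(img.getbands())

print(channels)
```

Single-band modes such as ‘L’ report one channel, while ‘YCbCr’ and ‘RGB’ report three.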

With this info, the PIL image is converted to a PyTorch tensor of shape (height, width, channels). Its dimensions are then reordered from Height-Width-Channel to Channel-Height-Width, and 8-bit values are scaled to the 0 to 1 range, just as in the NumPy case.
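The PIL path can be sketched roughly as follows. This is a simplified version that assumes an 8-bit RGB image and skips the per-mode dtype handling; the variable names are illustrative.

```python
import numpy as np
import torch
from PIL import Image

# A 3-wide, 2-tall RGB image filled with pure red.
pic = Image.new("RGB", (3, 2), color=(255, 0, 0))

# np.array copies the pixels out as Height-Width-Channel uint8 data.
hwc = torch.from_numpy(np.array(pic, dtype=np.uint8))

# Reorder to Channel-Height-Width, make contiguous, scale to [0, 1].
chw = hwc.permute(2, 0, 1).contiguous().float().div(255)

print(hwc.shape)  # torch.Size([2, 3, 3])
print(chw.shape)  # torch.Size([3, 2, 3])
```

PIL reports size as (width, height), which is why a (3, 2) image becomes a tensor with height 2 and width 3.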

The transpose step is the computational bottleneck: making the reordered data contiguous means reading memory with a stride rather than sequentially, which breaks the access patterns that CPUs are optimized for and leads to cache misses.
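The strided access can be made visible by inspecting tensor strides. In this sketch (with arbitrary dimensions 4x5x3), permute only creates a view with rearranged strides, and the actual data movement happens when contiguous() is called.

```python
import torch

hwc = torch.zeros(4, 5, 3)    # Height x Width x Channel
view = hwc.permute(2, 0, 1)   # Channel x Height x Width, no copy yet

print(hwc.stride())           # (15, 3, 1)
print(view.stride())          # (1, 15, 3)
print(view.is_contiguous())   # False

chw = view.contiguous()       # copies the data into true CHW order
print(chw.stride())           # (20, 5, 1)
```

In the permuted view, neighbouring elements along the width are 3 values apart in memory, so filling the contiguous copy means jumping through the source buffer instead of reading it front to back.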